Ten years ago, a team of researchers at the University of Wisconsin-Madison began working together to develop an assessment tool that measured school-wide, task-based distributed instructional leadership practices. If that sounds complicated, that’s because it was. The research team may have anticipated the complexity of the work, but few of its members anticipated that ten years later, the resulting work would not only still be ongoing, but expanding and having a significant impact on school and district-level leadership across the country and around the world.

What was originally called the School Leadership Assessment Tools (SLAT) Project was ultimately named the Comprehensive Assessment of Leadership for Learning (CALL). CALL has been used by over 500 schools to assess distributed instructional leadership practices to support school improvement and professional development planning. In addition:

- An exclusive version of CALL is used by WestEd to support their school improvement work in schools.

- A new version of CALL, the WIDA School Improvement System (WIDA SIS), is the only assessment and feedback system to measure and support leadership practices for enhancing instruction for English Learners.

- In addition to WestEd and WIDA, various organizations and agencies have used CALL to support schools including the Wisconsin Department of Public Instruction (DPI), the Georgia Leadership Institute for School Improvement (GLISI), the School Leaders Network (SLN), and multiple education service agencies.

- CALL has partnered with highly regarded universities such as the University of Wisconsin-Madison, the University of Connecticut, and Clemson University.

- Currently, CALL is part of a 5-year grant with East Carolina University from the US Department of Education.

However, ten years ago CALL was an idea, a vision of how to improve assessment and evaluation. CALL might contain many task-based survey items and feedback, but it also contains a history of healthy intellectual debates, numerous lengthy collaborative meetings, many conversations on what specific word would capture specific leadership practices, and countless “call” puns.

It is remarkable to look back on the past ten years and consider how this all began, and this oral history will do just that. This oral history of CALL tells the origin story of this unique research-based system; it not only provides insight into how this tool came into existence but also brings to light the collaborative process within the higher education context. Various people who were involved with the CALL Project from the beginning contributed to this oral history, including myself. I am the current Project Director for CALL, and I have been involved in the project since day one. My narrative will be interwoven among the various recollections. Thank you to all who participated!

The Proposal: Education Leadership, Goal 5 (CFDA 84.305A-2)

In 2007, Rich Halverson and Carolyn Kelley, Professors of Educational Leadership and Policy Analysis (ELPA) at the University of Wisconsin-Madison, submitted a proposal to the Institute of Education Sciences (IES) in the U.S. Department of Education. Rich had developed a set of leadership rubrics informed by his work on distributed leadership. And Carolyn had just published a book, Learning First!, about instructional leadership practices.

Carolyn: We got the idea of applying for this IES grant and taking what we learned in our two separate research projects and trying to put something together that could be used in schools for leadership development and assessment.

Rich: One of the features of the rubrics that I had developed was a distributed leadership model, where we would focus on the tasks of leadership rather than the characteristics of the leaders. And Carolyn was down with that as well. So that became our pitch: we could identify the tasks that mattered for improving teaching and learning for all kids. Carolyn and I had been talking about working together for a while.

Carolyn: We were both pretty excited about the work we were doing, and the opportunity to come together and build something that could be an extension of the work we had already been doing.

Katina Stapleton is an Education Research Analyst at IES and served as the Program Officer for the CALL research project. She recalled what life was like at IES during that time.

Katina: Our Education Leadership portfolio has remained relatively small over the years--I’ve always thought of it as our ‘little engine that could.’ In the early years, we were in a startup mode at IES and were trying to launch several different programs. CALL was funded in 2009, the first year we competed Education Leadership as an independent topic instead of as part of a larger topic focused on education policy and systems. At that time there was a notable gap in the field. If you were looking for a validated education leadership measure--formative or summative--you had to search high and low. What stood out about CALL was that it was a formative measure and pretty unique. That’s not something we were seeing a lot of at the time.

During the proposal writing and submission, Rich and Carolyn began assembling a research team, starting with Matthew Clifford, Researcher at the American Institutes for Research (AIR); and Steve Kimball and Tony Milanowski, Research Scientists at the Wisconsin Center for Education Research (WCER).

Matthew: At the time, 10 years ago, the federal government wasn’t providing a lot of support for tool development, particularly around leadership. And CALL was one of the few grants that got funded.

Steve: It was a thrill to have the grant awarded by IES. It was still in the early stages of IES putting out funding for this type of work. I thought it was timely, cutting edge; I thought the focus was on the mark—developing a system for formative assessment primarily.

Matthew: It was great. It was one of my first federal wins. That was a big deal for me in my career.

Perhaps the most distinguishing characteristic of CALL is its focus on Distributed Leadership. At the time of the IES proposal, this concept was gaining momentum in the education field, thanks in large part to the seminal work by Rich, Jim Spillane, and John Diamond. That was certainly at the forefront for consideration at IES when reviewing this proposal.

Katina: We were in a time where we just needed to know more about Distributed Leadership and its potential for improving student outcomes. And this project could give us the potential to adequately measure Distributed Leadership. Everybody was talking about how important distributed leadership was. But if you have no way of measuring whether or not it was happening then you also had no way of measuring whether or not it’s had an impact. Ultimately, our panel of peer reviewers thought this was the right proposal at the right time to add to our knowledge of Distributed Leadership.

Matthew: This was not something that had been done before. Distributed Leadership was something that was put together as a concept but at that point it was somewhat an ambiguous concept, and its definition was really debated. I think having a survey like CALL really brought the concept, at least in my mind…made it more clear to the field.

Building a Team

Once the proposal was funded, Rich and Carolyn served as Principal Investigators and hired graduate assistants to join the project. Those fortunate UW-Madison graduate assistants in the first year were Seann Dikkers, currently an Education Consultant and faculty at Bethel University; Shree Durga, currently a Senior Product Manager for Developer Tools at Amazon Web Services (AWS); and myself. I was entering my third year as a doctoral student in ELPA when Rich, who was my advisor at the time, met with me and asked me to join the project. When I learned that I would be helping to develop a survey to assess leadership…I wasn’t too excited. While I was certainly happy to be getting funding, these weren’t topics that I had anticipated exploring in my own research. Little did I know then that ten years later this would be the primary focus of my career.

Rich: I tried to get the smartest people I knew around the table to talk about what would be a good measure of leadership practice.

Shree: At the time, Rich was part of the GLS (Games and Learning Society). We were doing a lot in video games, the intersection of new technical experiences, digital experiences for learning. He was trying to bring those ideas into a leadership context, which was not straightforward.

Seann: Rich and I were sitting out by the Union, and Rich was talking about having better data for [school] administrators in light of how fantasy football players might have great data put in front of them, and that is a space in which you could make decisions. When [Rich and Carolyn] got the grant to move forward with CALL, he contacted me and asked, ‘Would you like to be part of this?’ As a grad student, you say ‘Yes’ to those things.

Shree: The sense was that this could be a tool to have for diagnostic [purposes]. Like we have for health. And comparisons to health came up all the time. [Such as], you can log into MyChart and see a lot of information about what’s going on [with your health]. So why couldn’t you do the same thing for leadership?

The IES proposal’s goal was to develop School Leadership Assessment Tools (SLAT). And SLAT was the working title for the project…which thankfully didn’t last very long.

Seann (regarding the name): We spit-balled about 15 different things, and I remember CALL was one. I do remember throwing words next to letters for probably an hour and a half with the whole group. I might have said ‘Comprehensive Assessment of Leadership for Learning,’ but the whole group was there [and that’s what we ended up with]. Rich had an idea of the words that should be in there, and we just picked out those words and arranged them to make them spell something.

And thus CALL was born.

The evolution of the CALL logo from 2009 to 2019

Early Memories

With the research team assembled, it was time to build what was proposed: a task-based leadership survey. As we say in CALL: it was time to focus on the work.

In the first year, we looked at the Halverson Rubrics and worked to create individual, task-based items based on the practices captured in the rubrics. If we were only rating a practice based on a Likert scale-type process, the task would not be overly complicated. What we were attempting to do, however, was capture a unique potential practice for each response option for each item. This approach increased the degree of difficulty tenfold (at least).

Steve: The challenge was how to capture [practices] in a survey. That was a lot of work.

Carolyn: The strongest memory I have is the intensive meetings we would have for many hours to work through and capture what our research-based understanding was of leadership and translate that into an instrument that could assess levels of practice.

Steve: Fleshing out the rubric was certainly challenging. Developing the actual items and the language was quite a bit of a challenge…in particular because it was such a unique, I think, approach to a survey. Most surveys are framed in a way to get you your basic Likert-style responses; this survey was more of a qualitative survey, with the responses having qualitative implications, not necessarily something that can be quantified in an easy way.

Tony: It was quite difficult to think through what the constructs were and what the right questions would be to ask about the constructs. Intellectually, it was a fairly rigorous thing to do, right?

Matthew: The debates we had around those items that made up CALL…the backdrop of that was what really is distributed leadership and how is it manifest in schools? Who does what and how do they pull together?

Shree: I remember we had a process. We would write ideas on the board, and we would split up in groups. ‘Is it saying what we want it to say?’ ‘Is it too long to read?’ ‘Do the options make sense?’ ‘Are we repeating the question?’ The rigor behind it!...I still try to bring that aspect to even small things that I do. And that makes for a better tool.

Carolyn: The hours and hours [Mark] and I spent in a room together grappling over trying to make everything consistent, looking at the language, trying to reflect what we thought to be the language of practice.

Me [Mark]: Yeah we brought new meaning to the term word-smithing!

Carolyn: And collaboration too! We probably spent more time together than with any other human being!

Seann: I remember doing a lot of word-crafting on the survey items, especially on the varying degrees of effect that we were putting before the administrators when they made their selections.

Shree: No meeting I remember not having whiteboarded. We always used the whiteboard for visualizing. Eventually we wanted it to be a tool, right? Digitally accessible interactive software, so I think visualizing those aspects helped. Everyone would walk up to the white board and start drawing things…

Rich: I still remember those initial years of work as some of the most interesting and engaging work that I’ve ever done as a professional, trying to reconcile all these different perspectives into a good product.

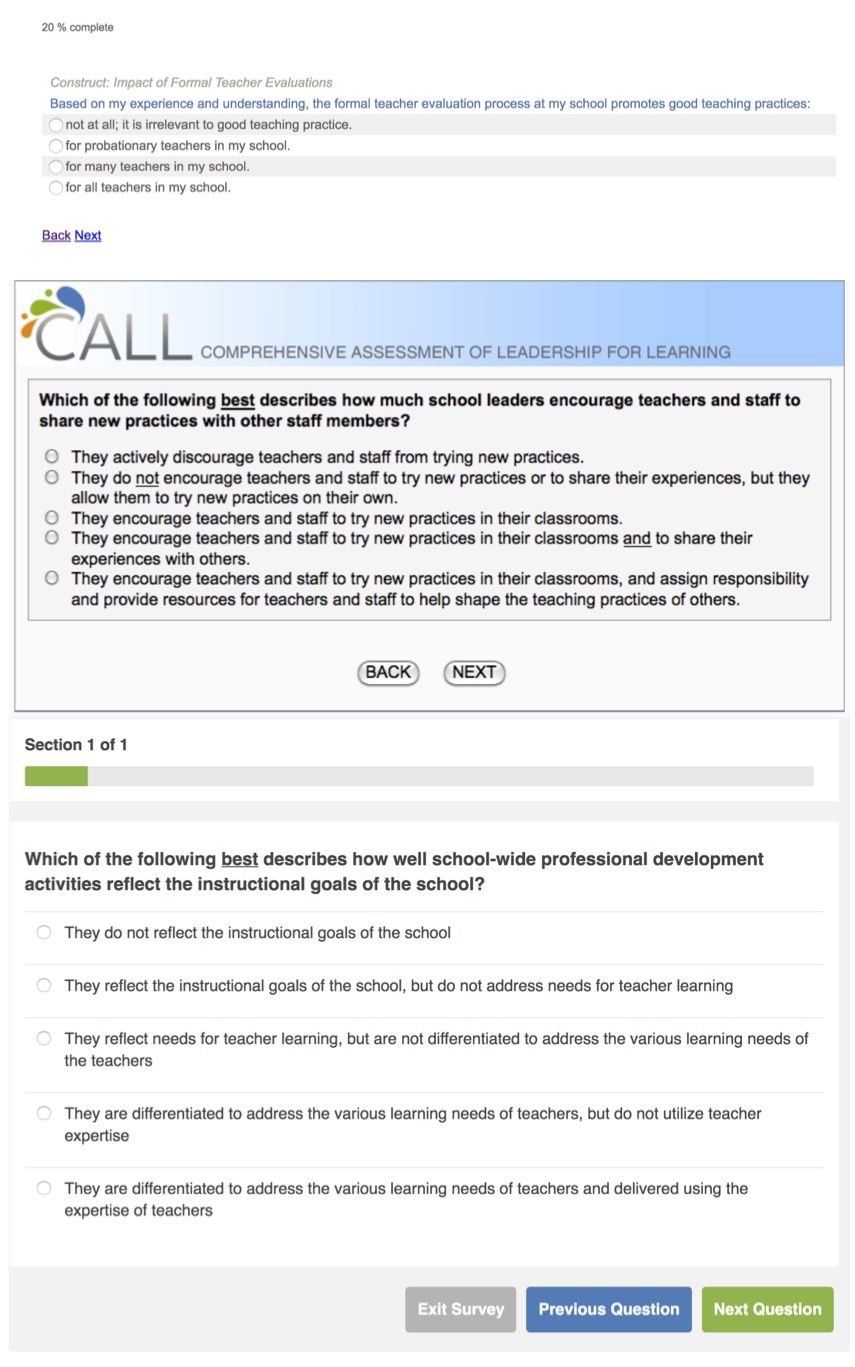

The design of the CALL Survey items changed over time both in terms of phrasing of the question stems and responses as well as the visual presentations.

Survey Complete…What do People Who Actually Do This Work Think?

Once the CALL Team constructed version 1.0 (a paper version), we engaged in some content validity work through an expert panel review. We invited expert practitioners in various roles to gather and review the instrument. Engaging in this work was the first indication of where CALL would end up: a useful, practical tool for practitioners in schools. Two of those panel experts would be involved with CALL in the years following this panel work: Mary Jo Ziegler, (at the time) Literacy Coordinator in the Madison Metropolitan School District (MMSD), and Jason Salisbury, (at the time) graduate student and former high school special education teacher. Today, Jason is an Assistant Professor at the University of Illinois-Chicago, and Mary Jo is the Senior Director of Curriculum and Coaching Center for Excellence at CESA 2 in Wisconsin. Jason could speak to the impact the panels had on CALL as an instrument; he joined the project as a graduate assistant the following year.

Rich: We would hammer away, trying to come up with items in domains, to take the rubrics and turn them into practices, and then share them with our community and make sure that we got the practice right, and more importantly that we got the language right, so that we weren’t alienating people with ‘researchy’ language.

Jason: [The panel consisted of] a diverse group of roles and responsibilities. There were teachers, CESA people, principals, assistant principals…I remember there was a lot of good conversation. We were all able to draw on different ideas and conceptualizations of school leadership. That provided some good information for the assessment.

Mary Jo: There were educators around the table with different roles and perspectives who had deep knowledge about schools and how they worked. We shared our thinking and our experiences around effective instruction and leadership. It felt like a [Professional Learning Community], like a collaborative conversation around best practices for supporting student learning.

Carolyn: The extent to which building an instrument and having really great practitioners look through it and review it and argue about it and kind of grapple with what practice looks like was an incredible window on practice.

Tony: It’s challenging to get practitioners to think really analytically and specifically. They are involved in the day-to-day work; they have developed a clinical mentality based on their own experience, and it’s hard for them to step back and say, ‘ok does this item measure some abstract concept?’

Tony’s perspective represents the overall challenge that this work presented: the effort to quantify qualitative information. To be sure, this project was not without its challenges…

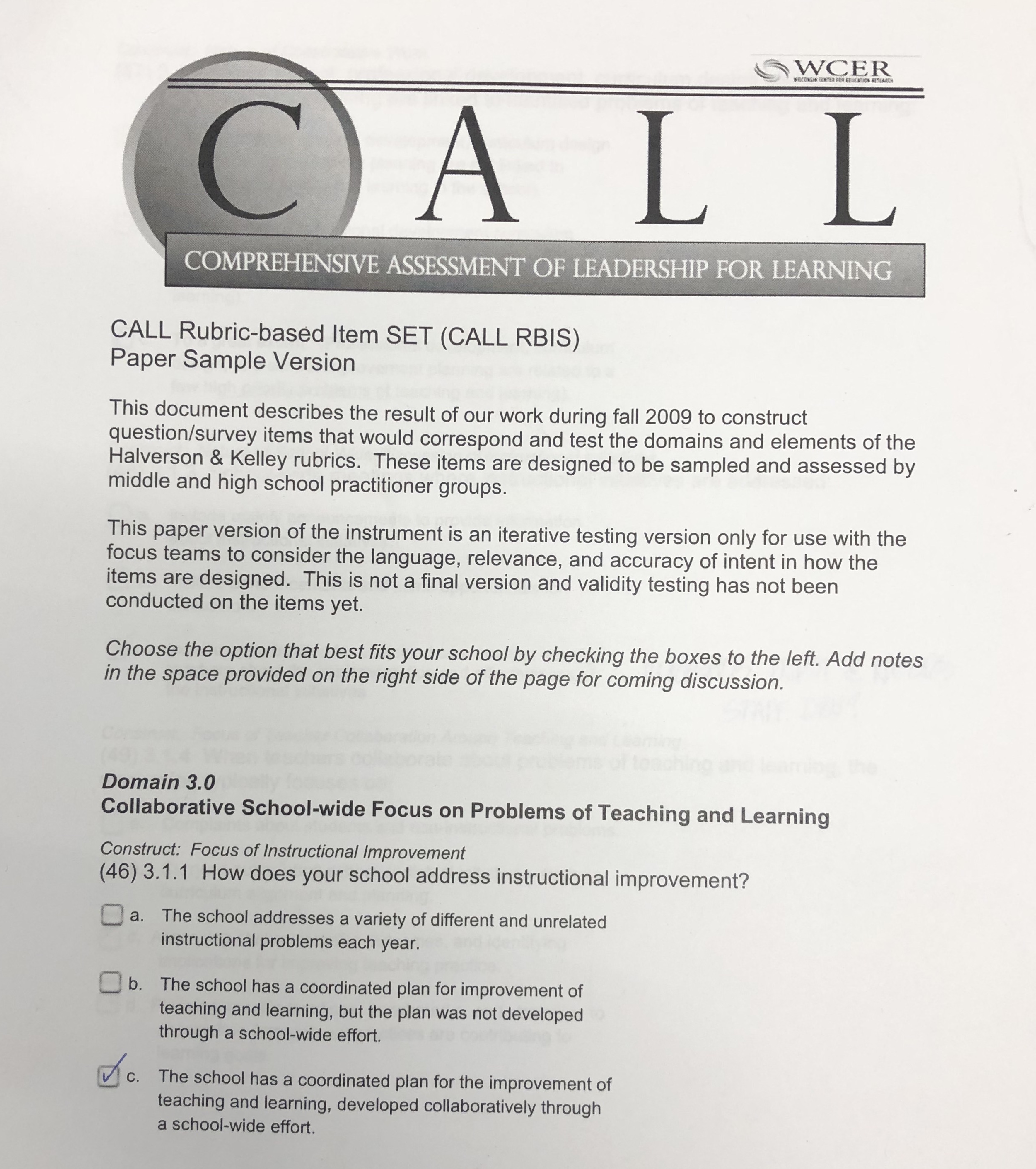

"CALL Survey 1.0": A paper version used by expert panelists for content validity work in Year One.

The Challenges We Welcomed and Overcame

As Theodore Roosevelt said, “Nothing worth having comes easy.” CALL could not have been a successful project without some hurdles along the way.

Rich: One of the challenges was fitting a formative tool into a summative box. All of the discussion [in education policy and research] was ‘how do we judge leaders?’ Our insight was, ‘how do we support leaders?’ I think we threaded that needle pretty well.

Carolyn: A big challenge was that practice is complex. The practice of one person is complex, and the practice of a system of people in a school is even more complex.

Jason: I remember thinking [CALL] was different. It wasn’t a normal, kind of traditional survey. But I also remember thinking that made sense, because leading a school is complex and complicated work.

Matthew: In some ways as a team we were fumbling around, trying to come up with some items. And some of those meetings got quite heated. Who would be the audience for the survey? How would it be used? That was a big topic. And also, what the items should look like, how they would appear, and then how would we create a user experience that was really worthwhile?

Jason: There were debates in the room. Different people had different feelings on what should be present on the instrument. They were healthy debates on the work and what should be captured in the work.

Rich: We had an ongoing debate about the psychometric properties of validation. I wanted the survey itself to be formative. So I did not want a Likert scale. I did not want the 1-5 ratings that would be psychometrically appropriate, but [in terms of practice] meaningless. So I did not want a survey where you got a “3.2,” and you didn’t know what the “3.2” meant. Because that fed into the disillusionment that educators were having with assessment [in general]. I really wanted to be able to say, ‘You got a 3 on this [item]? Just look at the item and see what a 4 is.’ To make the survey itself formative…

Steve: There are multiple dimensions of validity, content validity being one of them, but also how the information is being used. The reliability piece, yeah, that was something I was puzzling over based on how the responses were constructed.

Tony: The more specific you make a survey, the more you make it about: ‘I do X’…so you make X real specific and behavioral. And if it doesn’t have any connotation to it, the more reliable it’s going to be. But it’s very hard to make an item like that without having 1000 items because there are so many different ways to be effective as a school leadership team.

As someone who is currently working to get CALL into schools and in the hands of school and district leaders, and also as someone who was part of the development and validation work for CALL, I have experienced tension, to some extent, between validity in the eyes of the researcher and validity in the eyes of the end user. The CALL Research Team would spend months discussing the validity and reliability properties of the CALL constructs, but those measures would matter less and less the more it was considered by practitioners. Moreover, because CALL is formative in nature, and because it was intended to be used to support school leaders, enhancing the practical nature of CALL became paramount.

Platforms that Carried CALL to Version 2.0 and Beyond

After the first year of survey design and development, it was time to program the survey and start to pilot, refine, and engage in a large-scale validation process. The CALL Team looked at survey platforms that we could have acquired through the Wisconsin Center for Education Research (WCER). However, after some consideration, Shree Durga was called upon to program the first digital version of CALL.

Shree: We were trying to improvise with the survey software [WCER] had, but it wouldn’t do everything we wanted it to do. The default, at the time, was that you buy software and install it on your computer. That was not an easy, obvious solution.

At the time, most people were still predominantly using desktop or laptop computers rather than handheld devices, but Rich anticipated that would not be the case for very much longer.

Shree: At first we were looking at PHP and other existing software, but none of those were digital or web applications. And now that seems like dinosaur age, because everything at that time was desktop-centered. We wanted [CALL] to be accessed anywhere. That’s when we decided it had to be a web application.

Shree built her own survey platform, which we used in our year two pilot of CALL. We gathered a great deal of valuable feedback from the pilot users; however, in anticipation of years three and four, in which we would conduct a large-scale validation study, we began working with the University of Wisconsin Survey Center, which provided a rigorous review of the instrument and a survey platform for large-scale implementation.

Jason: The Survey Center was huge. If we hadn’t gotten the Survey Center involved, the instrument wouldn’t have been as user-friendly: identifying double-barreled questions, for example. Getting the Survey Center involved was a pretty important decision that was made along the way.

Rich and Carolyn met with John Stevenson, Director of the UW Survey Center, to see how the Center could support this project.

John: [Rich and Carolyn] had a deep respect and appreciation for the knowledge and methodological information that we were going to bring to help the project. They saw an entrepreneurial opportunity to serve the people they’ve spent their lives studying and caring about. I meet people who have entrepreneurial interests, and they are not always as likeable (laughs) to be perfectly honest.

Considering Design Features: CALL Becoming a Product

Today, we talk about CALL as being more than a survey. The goal now, as it was then, is to provide school leaders with action-oriented data that they can apply to their practice immediately. In this era of data-driven leadership, education leaders are often swamped with data. If the data are not presented to these practitioners in a user-friendly way, using data to inform decision-making would likely be counterproductive. Knowing this, it was a priority of the CALL Team to create a feedback system that would support those who are being assessed, and not just those who are doing the assessing.

Seann and Carolyn focused a great deal on the feedback that would be provided in the CALL Reporting System. The feedback within the current system was informed by the validation work during the research project.

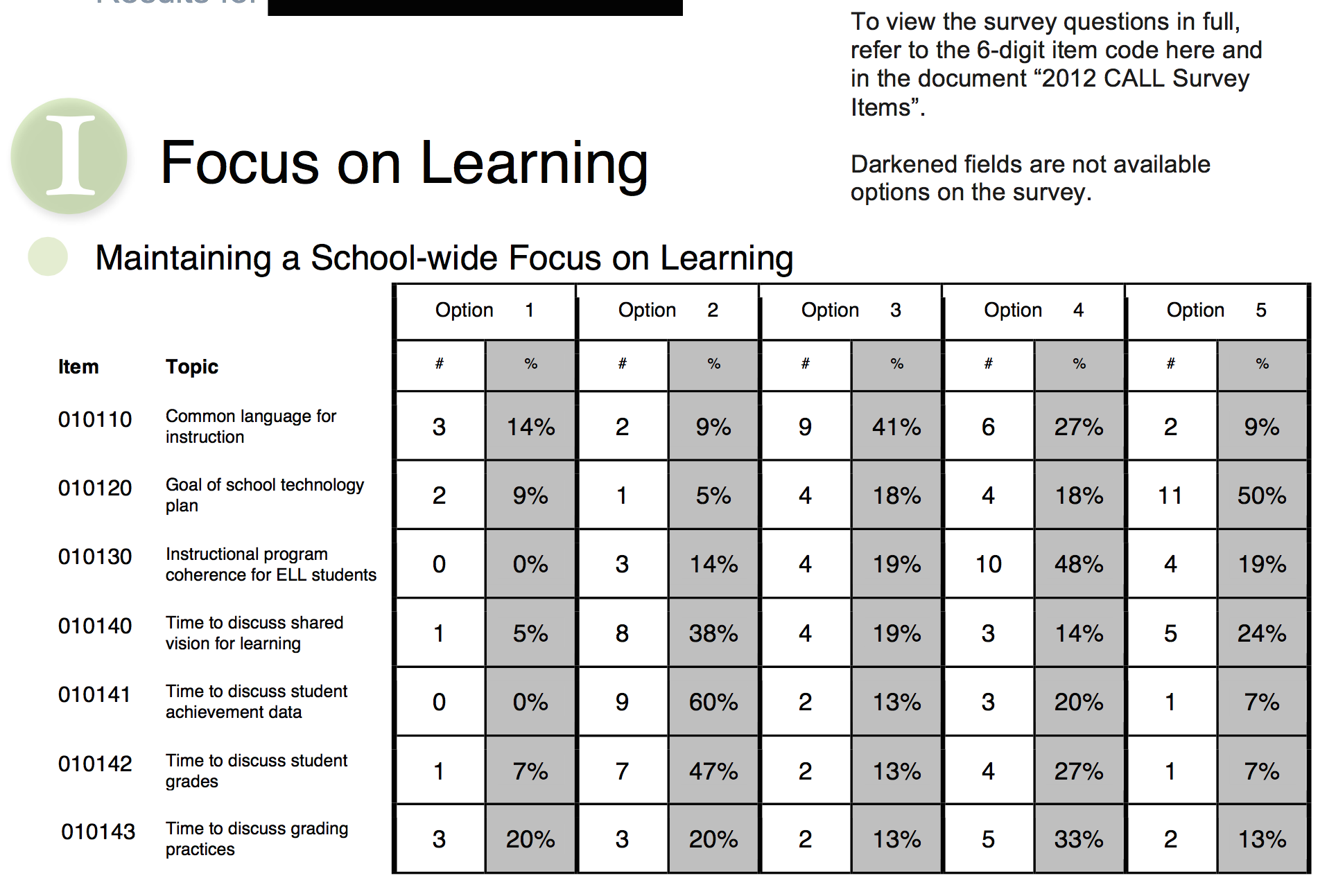

The first "CALL Feedback System." Pilot users received a PDF with data tables like this, along with convoluted directions on how to understand their results. Clunky as this was, it encouraged school leadership teams to work together to examine their CALL data and, in turn, their leadership practices. It also informed the design of the current item-level reporting system (see below).

Seann: We gave [school leaders] a sample CALL survey for them to work on, and at the time we didn’t have a way to kick the results back to the administrators. Part of what we wanted to see was how they reacted to it. As administrators, where do they go first? When we gave them [their results], what were they looking for? That would tell us where those things needed to be in the feedback system.

During the validation work, Marsha Modeste joined the CALL Team. Then a UW-Madison Graduate Assistant, Marsha is now Assistant Professor at Pennsylvania State University. She continues to use CALL in her research.

Marsha: I remember our conversations about trying to make [CALL] as user-friendly as possible. We wanted this to be a tool that, first, practitioners and schools could use, and that would inform their own practice and augment their own practice. We were thinking about design. So in addition to the rich content and in addition to the conceptual framework, the visual and design aspect of the survey was something that we talked about as equally important, because you didn’t want to turn folks off by having a clunky design or a clunky interface.

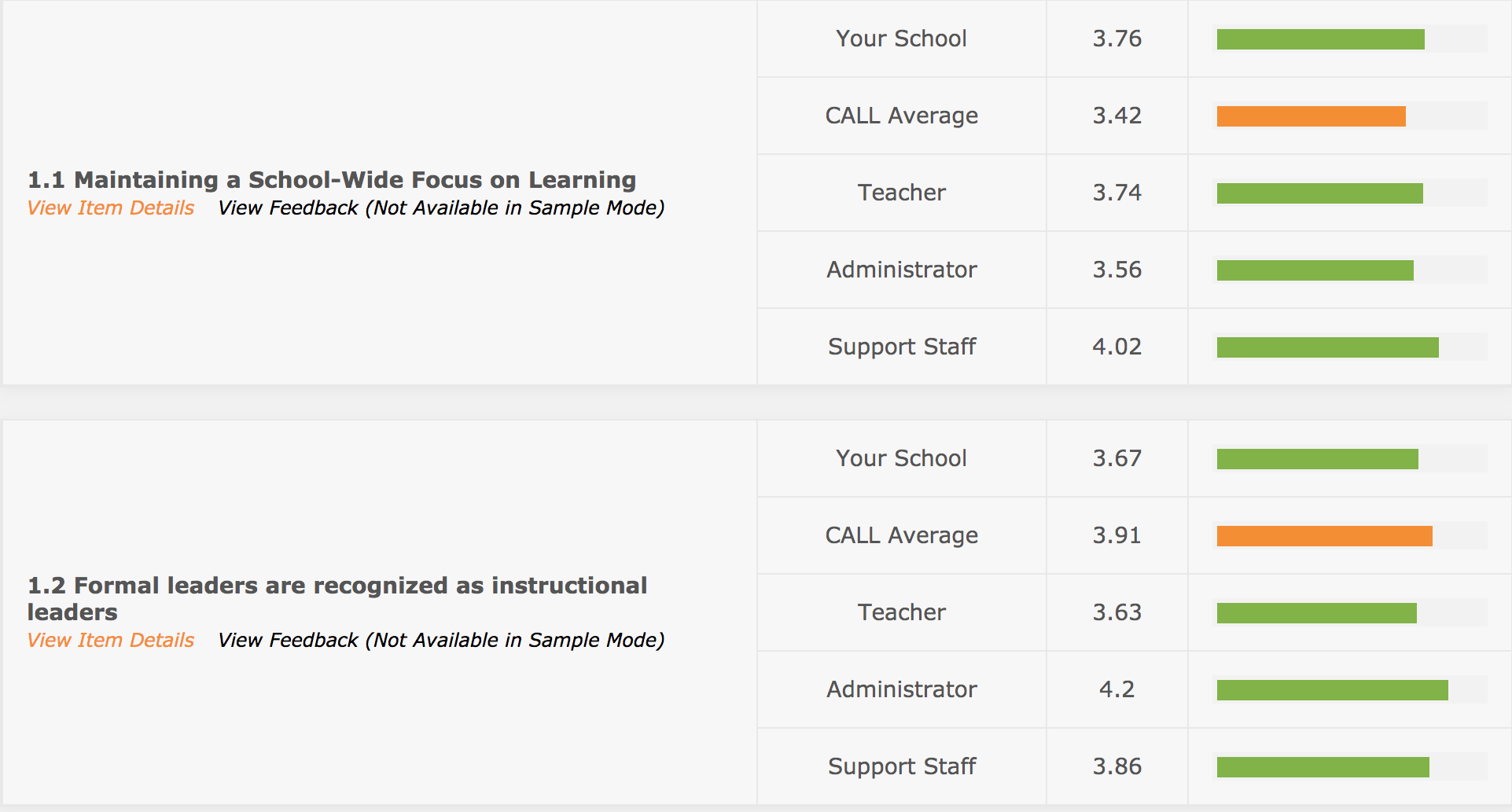

From the beginning, given CALL's focus on individual tasks for school improvement, the greatest value in the system was always going to be at the item-level. The current CALL reporting system reflects that thinking and design approach.

CALL Today

Ten years later, CALL is going strong through various iterations, enhancements, and partnerships. School Improvement organizations use CALL to support schools across the country. Researchers use CALL data to learn more about school-wide leadership. And Rich and Carolyn told the story of CALL in context in Mapping Leadership: Tasks that Matter for Improving Teaching and Learning in Schools.

Jason: There is still meaningful research coming out of [CALL]. Marsha [Modeste] is up and running with her [research], and internationally, too. And we had that piece come out in the Journal of Educational Administration. So it’s still impacting research and practice, and that’s pretty cool to see.

Marsha: One project that I started at Penn State that I am leading right now is around this question of dynamic partnership. What I thought would be a really cool study would be to see if this was a construct that I could develop and test and then learn more about. CALL has been a part of that. I essentially developed a construct called Dynamic Partnership and used the CALL framework to learn more about the distributed leadership practices that are already occurring in a set of schools.

As a practitioner, Mary Jo Ziegler was involved in CALL through the early stages of development and validity testing. While she worked as a Title I Consultant at Wisconsin DPI, she wanted to use CALL to support Focus and Priority Title I Schools.

Mary Jo [on using CALL with Title I schools]: A big part of what they were required to do as Focus Schools was to create a Continuous Improvement Plan. Often, the only data they had was summative academic data to base those plans on. What we saw were plans that were often superficial, and were kind of taking shots in the dark as to what might have an impact. And then it would be a full year before they would get any kind of feedback on whether there was growth. And if there was any growth, they had no idea about what really caused it. Giving them [CALL as a] source of data--it gave them more actionable and more responsive targets, things that you can actually do something with in the short term and see some positive results.

Jason: Even to this day, when I interviewed for my [current Assistant Professor] position, the fact that I was involved in CALL, mattered. People know CALL.

In 2013, the CALL research project concluded. In most research projects, the project work will cease unless researchers are able to obtain a new grant to keep the project going. However, the CALL Team took a different approach. Based on the experience of participants in the CALL project, we thought this could be something that schools might pay to use…if we could transition to a commercial product.

CALL as a product: the first online reporting system developed by LGN

We worked with an organization called Learning and Games Network (LGN), which developed the first commercial CALL product. Soon after, a relatively new non-profit organization, the Wisconsin Center for Education Products and Services (WCEPS), took CALL on as a start-up business. I came with it as Project Director. WCEPS works with the UW School of Education to take innovation that is coming from there and help turn it into small businesses and start-ups. Matt Messinger is the Director of WCEPS, and he brought CALL into WCEPS as one of the first projects in its portfolio.

Matt: The [CALL] creators, Rich, Carolyn, and the entire team are innovative and were open to trailblazing with us at WCEPS which was much appreciated, to say the least! There were several reasons why WCEPS worked with CALL. It's a valuable tool that can help schools succeed. The tool gives specific steps, by virtue of the answer choices, that schools can take to improve instruction. This is valuable! [And,] CALL is an online tool we could adjust so that was another reason. As schools adopt more Ed-tech, [WCEPS] wanted to get into that space as well.

Katina: Part of what we do at IES is support the production of evidence, but evidence that stays in a journal and products that are shelved are not necessarily that helpful. What was exciting to me was that not only was the research portion of the project successful, but once you had evidence that CALL was a valid measure you were able to commercialize it and get it out into the field.

Carolyn: I didn’t think that [CALL] would be where it is today. We didn’t have a vehicle for this when we started the project, a WCEPS-like vehicle to continue the work and to get it out. I had never been part of a project that had a life beyond the grant really…unless you got another grant.

“Re-CALLing” the Times When…

As we approach the year 2020, we can take the opportunity to use hindsight and reflect on our work then and on what CALL has become today. A question that CALL Team members and contributors considered: Ten years ago, what did you think would become of CALL?

Jason: To be honest, at the time, if you would have told me then that ten years later [Mark] and I would be talking on the phone [about CALL], and this thing is going to go out on its own, and that WCEPS was going to get involved, I wouldn’t have believed you. Not that I didn’t think the work was valuable…but I didn’t think it would have the staying power that it’s had.

Steve: I certainly didn’t envision it as it is today. The crafting of the feedback based on the results, the real time opportunity and the technology involved with it for providing the feedback. I didn’t think of that—I thought of it more as a VAL-ED type thing: ‘Here are your results, and here are some possible things to focus on.’

Matthew: I always thought that CALL would be the forerunner on distributed leadership measurement. And then providing feedback to principals about what areas in their building were pulling in the same direction, and which ones were pulling against the tide, sort of.

Shree: At the time, I was treating this as another research project. We didn’t use the word “product” at the time. I certainly didn’t envision it to materialize in the way it has. That has been very pleasantly surprising to me.

Steve: Frankly, I was a little cautiously optimistic, or maybe a little…a tinge of doubt on whether we could create a qualitative survey that could be backed with reliability and validity. I didn’t see how that could quite work out. But it did. So I was wrong. I didn’t totally doubt it, I was just a little bit…a healthy skepticism, shall we say.

I think about CALL every day. And it was fun to talk to my former colleagues and have them reflect on this work from ten years ago. They each brought a unique perspective to this oral history, just as they brought unique contributions to the original project. And while there was a healthy amount of disagreement along the way, we all look back fondly on that experience.

Shree: That was a fun part for me. I was learning a lot from the leadership literature side of [this project] because I didn’t have a lot of background, and that was not necessarily my area of focus in grad school. It was pretty cool to see folks geek out about that!

Tony: [I remember] the energy with which some folks really pursued this…You guys really put a lot of energy into this and were really revved up about it!

Jason: The power dynamics, from professor to grad student, were as flat as they could be. It wasn’t as if Rich, Carolyn, or Eric [Camburn] were running the show. We as grad students—we could say what we wanted to say or make a comment or offer a suggestion. There wasn’t an expectation that we would agree with Rich or Carolyn.

Seann: I felt incredibly fortunate to be on a great team, with a great group of people. It was an absolute learning experience. I feel really blessed that I was on CALL. I think back on it with fond memories.

As for the contributions to the field, those are ongoing:

Mary Jo: [As an educator], I don’t remember ever having my district, any district that I ever worked in, ask me to evaluate the effectiveness of the way the school or district was being organized and run...actually being able to give my input on how it might be improved. [CALL] really dug down into specifics and gave really high quality information from everyone on the staff, their perspective on some critical pieces of what impacts student learning.

Matthew: The other thing that was nice and somewhat unique about CALL was that it focuses also on education models that bring students, regardless of ability, together in a school. That was something that was injected all the way through. Those inclusion models—we still struggle to do those. And I think [CALL] kind of points people in the right direction.

Marsha: What I appreciated about CALL, and what I still do appreciate, is that it focuses on practices, on leadership practices. And it recognizes that multiple people engage in this work throughout and across an organization.

Rich: I thought that we could redefine how leadership is measured in the United States. But I underestimated the power of ideology of accountability. And I think the rest of the country was not that interested in measures that would support [school leaders]. They were interested in measures that would punish. And we did not change that argument. But we did succeed at our goal! And if the national conversation turns toward support…we are still here.

Want to learn more about CALL?

The first CALL brochure. A lot has changed since this brochure was created: 1) none of the URLs shown here work anymore, 2) we do not refer to CALL as a 360-degree survey, since it does not focus on an individual person, and 3) the logo.

However, to be sure, CALL is still "an online formative assessment and feedback system designed to measure leadership for learning practices."